Marktechpost AI Newsletter: 12 Trending LLM Leaderboards + Are AI-RAG Solutions Really Hallucination-Free? + HuggingFace Releases 🍷 FineWeb + LLM360 Introduces K2 and many more....

Featured Research

Top 12 Trending LLM Leaderboards: A Guide to Leading AI Models’ Evaluation

With numerous LLMs and chatbots emerging weekly, it’s challenging to discern genuine advancements from hype. The Open LLM Leaderboard addresses this by using the EleutherAI Language Model Evaluation Harness to benchmark models across six tasks: AI2 Reasoning Challenge, HellaSwag, MMLU, TruthfulQA, Winogrande, and GSM8k. These benchmarks test various reasoning and general knowledge skills. Detailed numerical results and model specifics are available on Hugging Face.

Text embeddings are often evaluated on a limited set of datasets from a single task, failing to account for their applicability to other tasks like clustering or reranking. This lack of comprehensive evaluation hinders progress tracking in the field. The Massive Text Embedding Benchmark (MTEB) addresses this issue by spanning eight embedding tasks across 58 datasets and 112 languages. Benchmarking 33 models, MTEB offers the most extensive evaluation of text embeddings. The findings reveal that no single text embedding method excels across all tasks, indicating the need for further development toward a universal text embedding method.

The SEAL Leaderboards use Elo-scale rankings to compare model performance across datasets. Human evaluators rate model responses to prompts, and the ratings determine which model wins, loses, or ties. Bradley-Terry (BT) coefficients are then fit by maximum likelihood estimation, which amounts to minimizing a binary cross-entropy loss. Rankings are based on average scores and win rates across multiple languages, with bootstrapping applied to estimate confidence intervals. This methodology aims to make the evaluation of model performance comprehensive and reliable. Key models are queried from various APIs, providing up-to-date and relevant comparisons.
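The ranking procedure described above can be sketched in a few lines of Python. This is a minimal illustration and not SEAL's actual code: the battle records, the model names, the learning rate, and the Elo offset of 1000 are all assumptions made for the example. It fits Bradley-Terry coefficients by gradient descent on the binary cross-entropy loss, converts them to an Elo-like scale, and bootstraps the battles to get rough 95% intervals.

```python
import math
import random


def fit_bradley_terry(battles, models, lr=1.0, epochs=300):
    """Fit BT coefficients by minimizing binary cross-entropy.

    battles: list of (model_a, model_b, outcome), where outcome is
    1.0 if model_a won, 0.0 if model_b won, and 0.5 for a tie.
    """
    index = {m: i for i, m in enumerate(models)}
    beta = [0.0] * len(models)
    for _ in range(epochs):
        grad = [0.0] * len(models)
        for a, b, y in battles:
            # P(a beats b) under Bradley-Terry is a logistic in beta_a - beta_b.
            p = 1.0 / (1.0 + math.exp(beta[index[b]] - beta[index[a]]))
            grad[index[a]] += p - y
            grad[index[b]] -= p - y
        beta = [w - lr * g / len(battles) for w, g in zip(beta, grad)]
        # Coefficients are only identified up to a shift, so center them.
        mean = sum(beta) / len(beta)
        beta = [w - mean for w in beta]
    return dict(zip(models, beta))


def to_elo(coefficients, base=1000.0, scale=400.0):
    """Map BT coefficients to an Elo-like scale (base is an arbitrary choice)."""
    return {m: base + scale * b / math.log(10) for m, b in coefficients.items()}


def bootstrap_intervals(battles, models, rounds=100, seed=0):
    """Resample battles with replacement and return rough 95% intervals."""
    rng = random.Random(seed)
    samples = {m: [] for m in models}
    for _ in range(rounds):
        resample = [rng.choice(battles) for _ in battles]
        for m, r in to_elo(fit_bradley_terry(resample, models)).items():
            samples[m].append(r)
    intervals = {}
    for m, values in samples.items():
        values.sort()
        intervals[m] = (values[int(0.025 * rounds)], values[int(0.975 * rounds) - 1])
    return intervals


# Hypothetical pairwise outcomes, invented purely for illustration.
models = ["model-a", "model-b", "model-c"]
battles = (
    [("model-a", "model-b", 1.0)] * 7 + [("model-a", "model-b", 0.0)] * 3
    + [("model-b", "model-c", 1.0)] * 6 + [("model-b", "model-c", 0.0)] * 4
    + [("model-a", "model-c", 1.0)] * 8 + [("model-a", "model-c", 0.0)] * 2
)

ratings = to_elo(fit_bradley_terry(battles, models))
intervals = bootstrap_intervals(battles, models)
for name in sorted(ratings, key=ratings.get, reverse=True):
    low, high = intervals[name]
    print(f"{name}: {ratings[name]:.0f} (95% interval {low:.0f} to {high:.0f})")
```

Plain gradient descent keeps the sketch dependency-free; a production pipeline would more likely use an off-the-shelf logistic regression solver and far more bootstrap rounds.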

Editor’s Picks…

Are AI-RAG Solutions Really Hallucination-Free? Researchers at Stanford University Assess the Reliability of AI in Legal Research: Hallucinations and Accuracy Challenges

The Stanford and Yale University research team introduced a comprehensive empirical evaluation of AI-driven legal research tools. This evaluation involved a preregistered dataset designed to assess these tools’ performance systematically. The study focused on tools developed by LexisNexis and Thomson Reuters, comparing their accuracy and incidence of hallucinations. The methodology involved using a RAG system, which integrates the retrieval of relevant legal documents with AI-generated responses, aiming to ground the AI’s outputs in authoritative sources. The evaluation framework included detailed criteria for identifying and categorizing hallucinations based on factual correctness and citation accuracy.

RAG's advantage is its ability to give more detailed and accurate answers by drawing directly on retrieved texts. The study evaluated AI tools from LexisNexis and Thomson Reuters alongside GPT-4, a general-purpose chatbot. While the LexisNexis and Thomson Reuters tools hallucinated less often than GPT-4, they still showed significant error rates: LexisNexis' tool had a hallucination rate of 17%, while Thomson Reuters' tools ranged from 17% to 33%. The tools also varied in responsiveness and accuracy. LexisNexis' tool was the highest-performing system, accurately answering 65% of queries. In contrast, Thomson Reuters' Westlaw AI-Assisted Research was accurate 42% of the time but hallucinated nearly twice as often as the other legal tools tested.
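To make the retrieve-then-generate pattern concrete, here is a toy sketch of it, with a simple citation check in the spirit of the study's citation-accuracy criterion. It is not how the LexisNexis or Thomson Reuters products work: the corpus, the document ids, the keyword-overlap scoring, and the example answer are all invented for illustration, and no language model is actually called.

```python
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, corpus, k=2):
    """Rank documents by keyword overlap with the query (a stand-in for a real retriever)."""
    query_counts = Counter(tokenize(query))
    scored = []
    for doc_id, text in corpus.items():
        doc_counts = Counter(tokenize(text))
        overlap = sum(min(query_counts[t], doc_counts[t]) for t in query_counts)
        scored.append((overlap, doc_id))
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc_id for overlap, doc_id in scored[:k] if overlap > 0]


def build_prompt(query, corpus, doc_ids):
    """Assemble a prompt that asks the model to answer only from the retrieved sources."""
    sources = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"{sources}\nQuestion: {query}"
    )


def ungrounded_citations(answer, doc_ids):
    """Return cited ids that were never retrieved, one simple hallucination signal."""
    cited = set(re.findall(r"\[([\w-]+)\]", answer))
    return sorted(cited - set(doc_ids))


# Placeholder corpus: fictional text, not statements of actual law.
corpus = {
    "doc-1": "The statute of limitations for written contracts is four years.",
    "doc-2": "A landlord must return a security deposit within 21 days.",
    "doc-3": "Trademark registration lasts ten years and is renewable.",
}

query = "How long does a landlord have to return a security deposit?"
retrieved = retrieve(query, corpus)
prompt = build_prompt(query, corpus, retrieved)

# A hypothetical model answer that cites one retrieved and one unretrieved source.
answer = "The deadline is 21 days [doc-2]; see also [doc-9]."
print(retrieved)
print(ungrounded_citations(answer, retrieved))
```

A check like this only catches citations to sources that were never retrieved. It cannot tell whether a cited source actually supports the claim, which is the harder kind of error the study also counts as a hallucination.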

ADVERTISEMENT

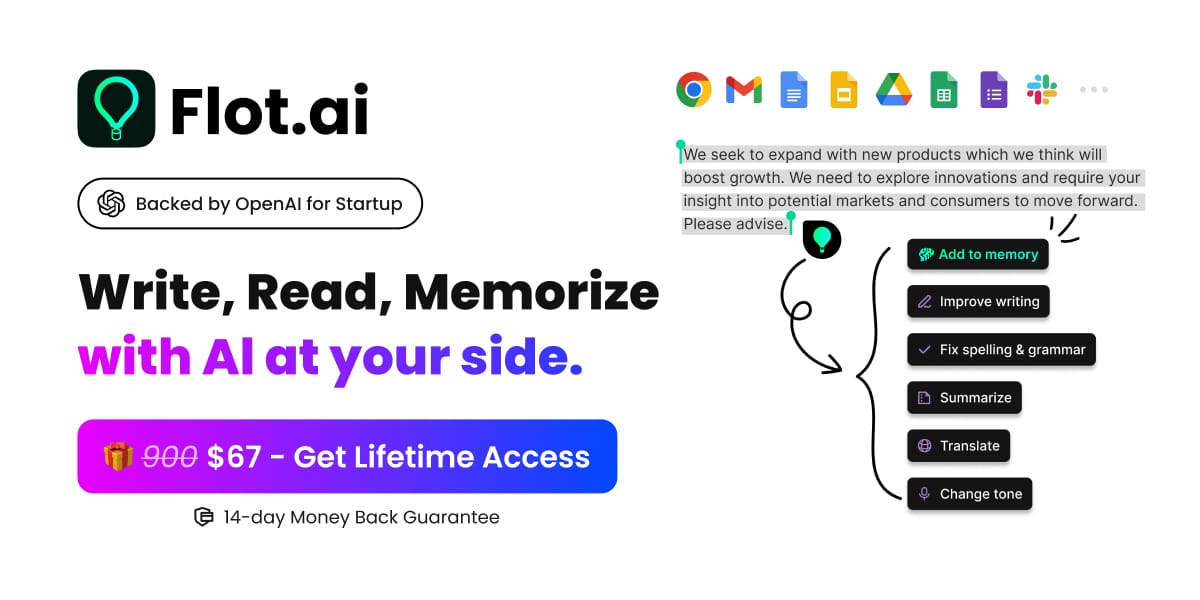

Your Everywhere and All-in-One AI Assistant

Imagine an AI companion that works across any website or app, helping you write better, read faster, and remember information. No more copying and pasting—everything is just one click away. Meet Flot AI!(Available on Windows and macOS)

HuggingFace Releases 🍷 FineWeb: A New Large-Scale (15-Trillion Tokens, 44TB Disk Space) Dataset for LLM Pretraining

Hugging Face has introduced 🍷 FineWeb, a comprehensive dataset designed to enhance the training of large language models (LLMs). Published on May 31, 2024, this dataset sets a new benchmark for pretraining LLMs, promising improved performance through meticulous data curation and innovative filtering techniques.

🍷 FineWeb draws from 96 CommonCrawl snapshots, encompassing a staggering 15 trillion tokens and occupying 44TB of disk space. CommonCrawl, a non-profit organization that has been archiving the web since 2007, provided the raw material for this dataset. Hugging Face leveraged these extensive web crawls to compile a rich and diverse dataset, aiming to surpass the capabilities of previous datasets like RefinedWeb and C4.

LLM360 Introduces K2: A Fully-Reproducible Open-Sourced Large Language Model Efficiently Surpassing Llama 2 70B with 35% Less Computational Power

The model, known as K2-65B, has 65 billion parameters and is fully reproducible, meaning all artifacts, including code, data, model checkpoints, and intermediate results, are open-sourced and accessible to the public. This level of transparency aims to demystify the training recipe used for similar models, such as Llama 2 70B, and provides clear insight into the development process and performance metrics.

The development of K2 was a collaborative effort among several prominent institutions: MBZUAI, Petuum, and LLM360. This collaboration leveraged the expertise and resources of these organizations to create a state-of-the-art language model that stands out for its performance and transparency. The model is available under the Apache 2.0 license, promoting widespread use and further development by the community.